Prompting for Content Assistant


This section offers tips and best practices for creating prompts for Content Assistant.

The effectiveness of the following tips depends on a variety of circumstances, such as the specific model you are using, your configuration, and the task you want the model to perform. Keep in mind that any large language model is inherently unpredictable. Use its output with caution!

Structuring your prompt

This section provides step-by-step instructions for creating one of many possible structures for a successful prompt. It assumes default values for the sub-prompts Source Text and Single-Line.

You can add and edit operations in the Operations tab in the module configuration. Only the name of a given operation is visible to the end-user, not the corresponding prompt.

Operation without options

We provide a prompt example for an operation without options based on the following sample task: shorten text.

- As a first step, define a fitting role for the task at hand (this is referred to as role prompting). Make the role as specific as possible to improve the quality of the output.

Example:

You are an expert in writing and text creation in any given language.

- Tell the model where to expect input text, if applicable.

Example:

You will receive an input text at the end of this message.

...

Use the rest of this prompt as input:

<YOUR INPUT>

The Content Assistant already includes part of this message as one of the provided default values, namely “Use the rest of this prompt as input:” (not visible). You therefore do not have to manually add this to your prompt. Your input is automatically inserted at <YOUR INPUT>.

- Break down complex tasks into smaller steps.

Example:

1. Read through the input text and understand what it is about.

2. Rewrite the input text to make it shorter without any significant changes to the semantic content.

- Specify whether the order of the sub-tasks is important and what language they should be performed in. Optionally, you can ask the model to understand the tasks before getting started.

Example:

Perform the following tasks in strict order. Read through the tasks and understand them before you begin. Perform the tasks in the language of the input text:

1. …

2. …

- Define what kind of output you expect from the model, for example whether you want the model to explain its answer.

Example:

Output: You must only output the rewritten text. Do not add anything else to your answer.

The final prompt, which you manually enter, would look as follows:

You are an expert in writing and text creation in any given language.

You will receive an input text at the end of this message.

Perform the following tasks in strict order. Read through the tasks and understand them before you begin. Perform the tasks in the language of the input text:

1. Read through the input text and understand what it is about.

2. Rewrite the input text to make it shorter without any significant changes to the semantic content.

Output: You must only output the rewritten text. Do not add anything else to your answer.
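When you draft and compare several prompt variants outside of Content Assistant, it can help to assemble the prompt from the building blocks described above. The following is a minimal Python sketch of that idea. The function `build_operation_prompt` and its parameter names are hypothetical and not part of Content Assistant; the resulting text is what you would paste into the Operations tab.

```python
def build_operation_prompt(role, input_notice, preamble, tasks, output_rule):
    """Assemble a prompt from the building blocks described above.

    All names are illustrative; Content Assistant does not provide this function.
    """
    # Number the sub-tasks so that the "strict order" instruction is unambiguous.
    numbered = [f"{i}. {task}" for i, task in enumerate(tasks, start=1)]
    parts = [role, input_notice, preamble, *numbered, f"Output: {output_rule}"]
    # Blank lines between the parts mirror the layout of the example prompt.
    return "\n\n".join(parts)


prompt = build_operation_prompt(
    role="You are an expert in writing and text creation in any given language.",
    input_notice="You will receive an input text at the end of this message.",
    preamble=(
        "Perform the following tasks in strict order. Read through the tasks "
        "and understand them before you begin. Perform the tasks in the "
        "language of the input text:"
    ),
    tasks=[
        "Read through the input text and understand what it is about.",
        "Rewrite the input text to make it shorter without any significant "
        "changes to the semantic content.",
    ],
    output_rule=(
        "You must only output the rewritten text. "
        "Do not add anything else to your answer."
    ),
)
print(prompt)
```

Keeping the building blocks separate makes it easier to change one of them at a time, for example the role, and to observe how the output changes.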

Operation with options

Using an option in your prompt allows the Content Assistant end-user to choose a predefined value for a parameter that is integrated in your prompt before performing the defined operation on an input text. For example, when an end-user chooses to translate an input text, they are given the option to choose a predefined language into which the text should be translated.

The prompt for an operation with options should be structured similarly or identically to a prompt for an operation without options. Like an operation without options, it is defined in the Operations tab. Below you can find an example prompt for the “Translation” operation in which the end-user chooses a predefined value for the parameter [lang]:

Act as a translation expert for any two given languages.

You will receive an input text at the end of this message.

Perform the following tasks in strict order. Read through the tasks and understand them before you begin:

1. Read through the text and understand what it is about.

2. Identify any textual characteristics of the text including but not limited to: brevity, coherence, flow, inclusivity, simplicity, unity, voice, tone, diction, punctuation, sentence structure, syntax, figurative language.

3. Translate the text to [lang] and ensure that the translation matches the semantics and textual features of the original text. Your translated text should sound natural.

Output: Output only the translated text. You must not add anything else to your answer.

If parameters are used in an operation prompt, they must always take the form of [<your parameter>].

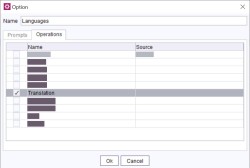

In the module configuration, you can add or edit an option by clicking the respective buttons in the Options tab. Options allow you to predefine values for a parameter used in an operation prompt. Below you can see the sample option “Languages”. The parameter [lang] can take on values that are predefined in the Prompts tab of the “Languages” option.

Note that in this tab, you can define an option name, in this case “Languages”, as well as names for the individual values, e.g. “German”. Both will be displayed to the end-user when using Content Assistant in the ContentCreator. You should thus ensure that your chosen names are descriptive.

If a parameter is used in the operation prompt, the value prompt for each value, in this example “English” and “German”, must include the assignment of said value to the parameter. This takes the form of [<your parameter>] = <your parameter value>. Note that the value name and the parameter value assigned in the value prompt do not have to be identical. However, the value name should be representative of the assigned parameter value.
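The two syntax rules, [<your parameter>] in the operation prompt and [<your parameter>] = <your parameter value> in each value prompt, can be checked before you enter the prompts in the module configuration. The following Python sketch is a hypothetical helper for that purpose. It only checks the written form of the prompts and makes no assumption about how Content Assistant processes parameters internally; all function names are illustrative.

```python
import re

# Matches parameters written as [name], for example [lang].
PARAM_PATTERN = re.compile(r"\[([^\[\]\s]+)\]")
# Matches a value prompt of the form "[name] = value".
ASSIGNMENT_PATTERN = re.compile(r"\s*\[([^\[\]\s]+)\]\s*=\s*(.+?)\s*", re.DOTALL)


def find_parameters(operation_prompt):
    """Return the set of parameter names used in an operation prompt."""
    return set(PARAM_PATTERN.findall(operation_prompt))


def parse_value_prompt(value_prompt):
    """Split '[param] = value' into (param, value); return None if malformed."""
    match = ASSIGNMENT_PATTERN.fullmatch(value_prompt)
    if match is None:
        return None
    return match.group(1), match.group(2)


def unassigned_parameters(operation_prompt, value_prompts):
    """Return parameters of the operation prompt that no value prompt assigns."""
    assigned = set()
    for value_prompt in value_prompts:
        parsed = parse_value_prompt(value_prompt)
        if parsed is not None:
            assigned.add(parsed[0])
    return find_parameters(operation_prompt) - assigned


operation_prompt = "Translate the text to [lang] and ensure that it sounds natural."
# Value names (keys) and assigned parameter values do not have to be identical.
languages = {"English": "[lang] = English", "German": "[lang] = German"}

print(find_parameters(operation_prompt))
print(unassigned_parameters(operation_prompt, languages.values()))
```

An empty result from `unassigned_parameters` means that every parameter in the operation prompt is assigned by at least one value prompt.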

Finally, you have to assign the option itself to an operation. Do this in the Operations tab by checking the corresponding operation. Below, you can see that the option “Languages” is assigned to the operation “Translation”.

Things to keep in mind

- Be as clear and concise as possible - longer prompts are not necessarily better prompts.

- Large language models can be sensitive to minor prompt changes. Even simple changes in formatting, such as the use of line breaks, might change the output.

- Always refer to one and the same entity with the same name, e.g. always use the term "input text" to refer to your input text; steer clear of using variations/synonyms in your prompt.

- In your prompt, use examples carefully as these might bias the model and lead to undesirable results. Using examples in your prompt is referred to as few-shot prompting.

- Prompting is an iterative process - it is highly likely that the first draft of your prompt will not be the final one. As you go through this process, analyze the model's output carefully to identify potential shortcomings in your prompt.

- Any model is trained on data that, while sometimes vast, is limited in the knowledge it encompasses, e.g. ChatGPT 3.5 only includes data until 2021.

- Training data for any model might include biases that can be reproduced in the model's output and might make the output undesirable.

- Large language models can hallucinate. In other words, the model might produce incorrect or misleading information. Always verify information produced by large language models.
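The tip about referring to one entity by one name can be checked mechanically while you iterate on a draft. The following Python sketch flags unwanted synonyms for a preferred term such as "input text". The function `find_term_variants` and the list of variants are hypothetical examples; adapt the list to the terms used in your own prompts.

```python
import re


def find_term_variants(prompt, variants):
    """Return the variants that occur in the prompt (case-insensitive, whole phrases)."""
    found = []
    for variant in variants:
        # Word boundaries avoid matching a variant inside a longer word.
        pattern = r"\b" + re.escape(variant) + r"\b"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            found.append(variant)
    return found


draft = (
    "You will receive an input text at the end of this message. "
    "Rewrite the original text to make it shorter."
)
# Hypothetical synonyms that should be replaced by "input text".
variants = ["original text", "given text", "provided text"]
print(find_term_variants(draft, variants))  # prints ['original text']
```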